Two weeks ago Anthropic shared a unique coding challenge that piqued my interest: a programming puzzle that is meant to be solved by AI. The AI got me 99% of the way there. Until it didn't.

The challenge is part of Anthropic's hiring process for performance software engineers: the folks who make AI run faster on fewer computers. It simulates the process of rearranging a program's instructions so that the machine does as much work as possible in each clock cycle. The program starts at 148,000 cycles, and your job is to bring that number down as far as you can. It's the kind of puzzle where the answer is either right or wrong; there's no way to cheat your way to a lower number.
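To make the idea of packing more work into each cycle concrete, here is a toy sketch in Python. It is not the challenge's actual machine or code: the instruction names, the `schedule` helper, and the slots-per-cycle limit are all made up for illustration. It greedily fills each cycle with instructions whose inputs are already computed.

```python
from typing import Dict, List, Set


def schedule(deps: Dict[str, Set[str]], slots_per_cycle: int) -> List[List[str]]:
    """Greedy list scheduling: fill each cycle with instructions that are ready."""
    done: Set[str] = set()
    remaining = list(deps)  # dicts preserve insertion order, i.e. program order
    cycles: List[List[str]] = []
    while remaining:
        # An instruction is ready once all its inputs finished in an earlier cycle.
        ready = [op for op in remaining if deps[op] <= done]
        if not ready:
            raise ValueError("circular or unknown dependency")
        bundle = ready[:slots_per_cycle]
        cycles.append(bundle)
        done.update(bundle)
        remaining = [op for op in remaining if op not in bundle]
    return cycles


# Hypothetical toy program: two independent load/add chains feeding one store.
program = {
    "load_a": set(),
    "add_a": {"load_a"},
    "load_b": set(),
    "add_b": {"load_b"},
    "store": {"add_a", "add_b"},
}

serial = schedule(program, slots_per_cycle=1)  # one instruction per cycle
packed = schedule(program, slots_per_cycle=2)  # two instructions per cycle
print(len(serial), len(packed))  # prints "5 3"
```

The same five instructions take five cycles when issued one at a time and three when the two independent chains are interleaved. The real puzzle is far larger and has its own rules, but the lever is the same: find work that does not depend on each other and run it in the same cycle.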

Anthropic wants challengers to use Claude, their own AI, to solve it. At first, results come fast: within a couple of hours of working on the problem entirely on its own, Claude brought the number down from 148,000 to a few thousand. The middle phase was steady progress. Claude is remarkably good at implementing different strategies with dozens of variations, automatically rolling back what didn't work, trying again, and reporting what did work.

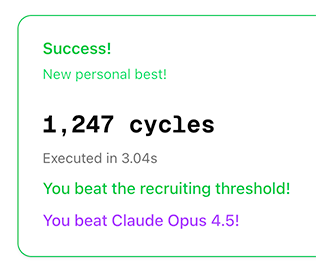

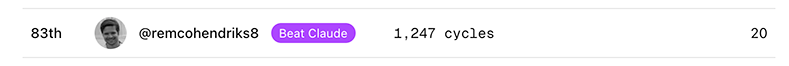

My result: 1,247 cycles, a 118x speedup. That beat both Anthropic's recruiting threshold for this challenge and Claude Opus 4.5's own best score, landing me 83rd on the leaderboard at the time. But the real story is what happened in the final stretch: the last 30 cycles were a grind. The AI would try every variation it could think of and report back: nothing works. That's where my approach flipped: instead of just directing the AI, I had to spot the assumptions it wasn't questioning, reframe the problem entirely, and nudge it towards ideas it wouldn't have found on its own. AI is a really powerful aid, but the hardest problems still need a human deciding where to point it.

This challenge isn't only about code. Anthropic designed it to find a specific type of engineer: someone who sees "almost optimal" and can't resist going further. They implicitly encourage AI use by telling you the scores you need to 'Beat Claude'. They're testing something deeper: not whether you're great at coding, but whether you can use AI to push past what either of you would reach alone. However, passing this challenge only gets you in the door. Candidates still face the full Silicon Valley hiring process afterwards: algorithmic interviews, live coding, brainstorm sessions, team conversations, and more. But as a filter to find people who get energized by this kind of problem? Likely one of the better ones I've seen.